Basic Library Generation¶

Now that we have a basic understanding of how to use Synthesizer to create a galaxy object, we can move on to generating a library of galaxies. This is done using the GalaxyBasis class within Synference, which takes a set of parameters and generates a library of galaxies based on those parameters.

In the simplest scenario, you define the parameter space you want to sample outside of the GalaxyBasis object. You then initialize the GalaxyBasis using these arrays.

We provide helper functions to generate these arrays for you, but you have complete flexibility to define your own, more complex parameter spaces if you wish.

Drawing Samples¶

[1]:

%load_ext autoreload

%autoreload 2

from matplotlib import pyplot as plt

from synference import GalaxyBasis

/opt/hostedtoolcache/Python/3.10.19/x64/lib/python3.10/site-packages/tqdm/auto.py:21: TqdmWarning: IProgress not found. Please update jupyter and ipywidgets. See https://ipywidgets.readthedocs.io/en/stable/user_install.html

from .autonotebook import tqdm as notebook_tqdm

These arrays can be generated using the draw_from_hypercube function, which takes as input the number of samples you want to draw, and a dictionary of parameter ranges. In this dictionary, the key is the parameter range, and the value is a tuple defining the minimum and maximum value for that parameter. The function will then return a dictionary of arrays, where each array contains the sampled values for each parameter. Other sampling schemes can be used (e.g. a Sobol

sequence)

By default, the draw_from_hypercube function uses a form of low-discrepancy sampling called Latin Hypercube Sampling (LHS). LHS is highly efficient compared to random sampling, especially when dealing with many parameters, as it ensures that the parameter space is sampled more evenly.

The dictionary we must define encapsulates our prior knowledge about the parameter space we intend to sample.

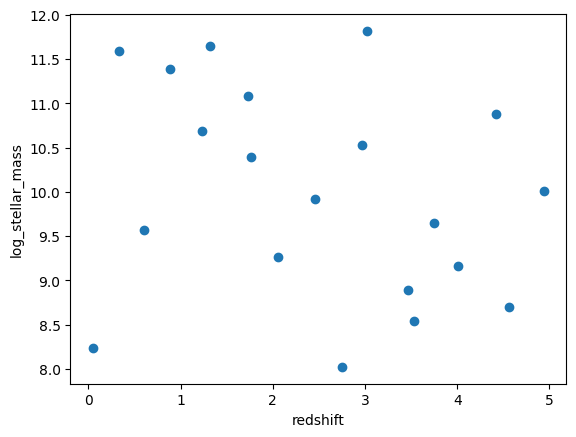

For this example, we will define a parameter space with two parameters: redshift and log_stellar_mass. The default sampling method is uniform sampling between the upper and lower bounds provided, but you can draw from any distribution you like by defining the arrays yourself.

[2]:

from synference import draw_from_hypercube

param_ranges = {"redshift": (0.0, 5.0), "log_stellar_mass": (8.0, 12.0)}

Above we have defined a parameter space with two parameters: redshift and log_stellar_mass. We have defined the ranges for these parameters as (0.0, 5.0) and (8.0, 12.0) respectively. We then use the draw_from_hypercube function to draw 100 samples from this parameter space, which returns a dictionary of arrays containing the values for each parameter.

[3]:

param_grid = draw_from_hypercube(param_ranges, 20, rng=42)

If we plot these values, we can see that the parameter space is sampled more evenly than if we had used random sampling.

[4]:

plt.scatter(param_grid["redshift"], param_grid["log_stellar_mass"])

plt.xlabel("redshift")

plt.ylabel("log_stellar_mass")

[4]:

Text(0, 0.5, 'log_stellar_mass')

For this basic tutorial, we will define a 6 dimensional parameter space four our library of galaxies. We will draw 10,000 samples—a size chosen for quick demonstration, though a real, useful library would require significantly more samples—to construct our synthetic galaxy population.

Our six parameters are:

redshift: The redshift of the galaxylog_stellar_mass: The logarithm (base 10) of the stellar mass in units of solar masslog_zmet: The logarithm (base 10) of the stellar metallicitypeak_age: The age corresponding to the peak of the log-normal star formation history (SFH)tau: The dimensionless width of the log-normal SFHtau_v: The V-band optical depth

[5]:

Ngal = 100

param_dict = {

"redshift": (0, 5),

"log_stellar_mass": (8, 12),

"log_zmet": (-4.0, -1.4),

"peak_age_norm": (0.0, 0.99), # fraction of the age of the universe

"tau": (0.1, 2.0), # dex

"tau_v": (0.0, 3.0), # magnitudes

}

params = draw_from_hypercube(param_dict, Ngal, rng=42)

Generating SFH Distributions¶

In the previous tutorial we saw how to generate a single SFH class instance representing a Constant star formation history. However, in many cases we may want to generate a library of star formation histories that represent a range of different galaxy types. Whilst this can be done entirely manually, synference provides a convenient helper function to generate a library of star formation histories based on a set of parameters.

[6]:

from synthesizer.parametric import SFH

from synference import generate_sfh_basis

The generate_sfh_basis function is used to generate an array of SFH objects from our sampled parameter arrays.

To do this, we pass the function the desired SFH class (e.g. SFH.LogNormal) and the dictionary of sampled arrays. The function then returns an array of instantiated SFH instances, where the paramaters for each object are drawn from the arrays we provided. We also require that you provide parameter units using unyt Units (e.g. Myr for age), which ensures that the parameters are correctly interpreted by the SFH class.

Optionally, we can define a redshift dependent star formation history. To this, designate a parameter which will be scaled by the available lookback time at the redshift of the galaxy. This is useful for parameters which are physically constrained by the age of the universe, such as the peak age of a log-normal SFH.

[7]:

sfh_models, _ = generate_sfh_basis(

sfh_type=SFH.LogNormal,

sfh_param_names=["peak_age_norm", "tau"],

sfh_param_arrays=[params["peak_age_norm"], params["tau"]],

sfh_param_units=[None, None],

redshifts=params["redshift"],

)

print(len(sfh_models), type(sfh_models[0]))

Creating SFHs: 100it [00:00, 1453.13it/s]

100 <class 'synthesizer.parametric.sf_hist.LogNormal'>

We can see that the generate_sfh_basis function has returned an array of 1000 SFH.LogNormal instances, each with the parameters drawn from the arrays we provided.

Generating Metallicity Distributions¶

In the same manner that we can generate a library of star formation histories, we can also generate a library of metallicity histories. While we don’t provide a helper function for this, these are simpler to generate:

[8]:

from synthesizer.parametric import ZDist

zdists = []

for z in params["log_zmet"]:

zdists.append(ZDist.DeltaConstant(log10metallicity=z))

print(len(zdists), zdists[0])

100 ----------

SUMMARY OF PARAMETERISED METAL ENRICHMENT HISTORY

<class 'synthesizer.parametric.metal_dist.DeltaConstant'>

metallicity: None

log10metallicity: -3.13879132270813

----------

The above code loops over the metallicities drawn from the prior samples. For each metallicity value, it instantiates the metallicity class, and append the result to a list.

Setting up the rest of our Synthesizer model¶

Grid¶

We must load in the SPS grid we will use to generate our stellar and nebular spectra. As we will be generating a lot of spectra, we may also wish to set the wavelength array defined for the grid, to limit the resolution and min/max limits to save storage space and processing time. This can be set on creating a Grid by passing a new_lam argument with a new wavelength array, onto which the grid will be interpolated using a flux-conserving interpolation. We have a helper method to generate

a new wavelength array with constant R between two wavelengths.

Here we are using a BPASS SPS grid with a Chabrier 2003 IMF, which has been post-processed with Cloudy 23.01.

[9]:

from synthesizer.grid import Grid

from unyt import Angstrom

from synference import generate_constant_R

new_lam = generate_constant_R(R=300, start=1 * Angstrom, stop=50_000 * Angstrom)

grid = Grid("test_grid", new_lam=new_lam)

Emission Models¶

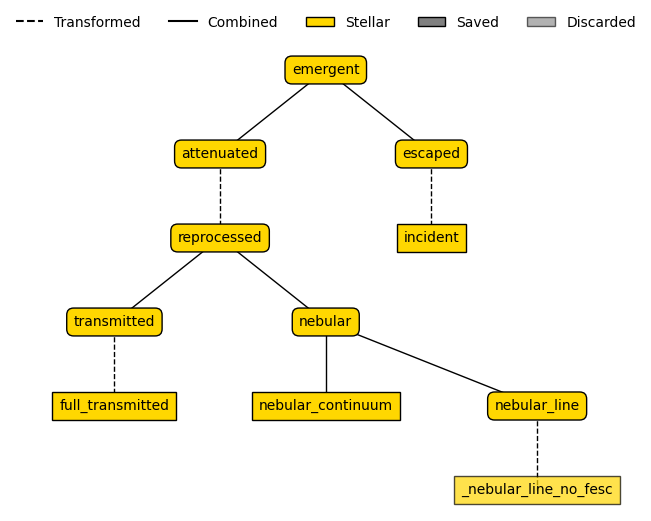

If you’ve already read the previous tutorial, you’ll remember that emission models are crucial for defining the process by which we generate observables of our galaxies. The GalaxyBasis class takes a single EmissionModel instance which is used for all galaxy generation.

In this example, as we have defined our model with a dust component (tau_v), we will use a TotalEmission emission model.

[10]:

from synthesizer.emission_models import TotalEmission

from synthesizer.emission_models.attenuation import PowerLaw

emission_model = TotalEmission(

grid=grid,

dust_curve=PowerLaw(slope=-0.7),

)

emission_model.plot_emission_tree()

[10]:

(<Figure size 600x600 with 1 Axes>, <Axes: >)

[11]:

emission_model._models["attenuated"].__dict__

[11]:

{'label': 'attenuated',

'_lam': array([1.00000000e+00, 1.00166667e+00, 1.00333611e+00, ...,

8.97092337e+05, 8.98587491e+05, 9.00085136e+05], shape=(8234,)),

'fixed_parameters': {},

'_emitter': 'stellar',

'_per_particle': False,

'masks': [],

'_lam_mask': None,

'_is_extracting': False,

'_is_combining': False,

'_is_transforming': True,

'_is_generating': False,

'_transformer': <synthesizer.emission_models.transformers.dust_attenuation.PowerLaw at 0x7f737bf3f250>,

'_apply_to': <synthesizer.emission_models.stellar.models.ReprocessedEmission at 0x7f737bddd870>,

'_apply_to_label': 'reprocessed',

'_children': {<synthesizer.emission_models.stellar.models.ReprocessedEmission at 0x7f737bddd870>},

'_parents': {<synthesizer.emission_models.stellar.models.EmergentEmission at 0x7f737bddf070>},

'_scale_by': (),

'_post_processing': (),

'_energy_balance_models': None,

'_scaler_model': None,

'related_models': {<synthesizer.emission_models.base_model.StellarEmissionModel at 0x7f737bddf0d0>},

'_save': True,

'_extract_keys': {'full_transmitted': 'transmitted',

'nebular_continuum': 'nebular_continuum',

'_nebular_line_no_fesc': 'linecont',

'incident': 'incident'},

'_models': {'attenuated': <synthesizer.emission_models.models.AttenuatedEmission at 0x7f737bddf9a0>,

'reprocessed': <synthesizer.emission_models.stellar.models.ReprocessedEmission at 0x7f737bddd870>,

'transmitted': <synthesizer.emission_models.stellar.models.TransmittedEmissionWithEscaped at 0x7f737bddf610>,

'full_transmitted': <synthesizer.emission_models.base_model.StellarEmissionModel at 0x7f737bddd810>,

'nebular': <synthesizer.emission_models.stellar.models.NebularEmission at 0x7f737bddd7e0>,

'nebular_continuum': <synthesizer.emission_models.stellar.models.NebularContinuumEmission at 0x7f737bddd840>,

'nebular_line': <synthesizer.emission_models.stellar.models.NebularLineEmission at 0x7f737c0c38e0>,

'_nebular_line_no_fesc': <synthesizer.emission_models.base_model.StellarEmissionModel at 0x7f737bf5fca0>,

'escaped': <synthesizer.emission_models.base_model.StellarEmissionModel at 0x7f737bddf0d0>,

'incident': <synthesizer.emission_models.stellar.models.IncidentEmission at 0x7f737bf5f820>},

'_bottom_to_top': ['transmitted',

'nebular_line',

'nebular',

'reprocessed',

'attenuated',

'escaped']}

You’ll notice that above, unlike in the first tutorial, we didn’t set the optical depth tau_v directy on the emission model. This is because we want it to vary between galaxies, so we will instead pass this attribute to GalaxyBasis to set it on the individual Galaxy level.

Instruments and Filters¶

Finally, the last thing we have to define is what we actually want to observe. We could simply call get_spectra to get the rest-frame and observed-frame spectra on the wavelengths of the Grid.

But what if we want observations in specific photometric filters, or matched to the resolution of a specfic spectroscopic instrument? We must create and define an Instrument, which contains this information.

Here we will load a predefined NIRCam instrument containing its wideband photometric filters.

[12]:

from synthesizer.instruments import JWSTNIRCamWide

instrument = JWSTNIRCamWide()

Putting it all together - Generating our Library¶

Now that we have all the constituent components of our model we can finally put it all together and generate our library of observables. Firstly we simply instantiate a GalaxyBasis object, passing in the various components we have created above.

We must also define a galaxy_params dictionary, which contains any arguments we wish to set on the individual Galaxy objects, rather than globally on the emission model.

In this case, we set the V-band optical depth (tau_v) for each galaxy. However, this dictionary can include any number of emission model parameters intended to vary per galaxy, star or black hole instance.

We are also explicitly telling the code to ignore the max_age parameter. While max_age varies per galaxy, it is redundant because it is already a defined transformation of the redshift, meaning its value can be calculated from another parameter already present in our set.

We give this model a name, ‘testing_model’, which will be used when saving the output files.

[13]:

galaxy_params = {"tau_v": params["tau_v"]}

galaxy_basis = GalaxyBasis(

grid=grid,

model_name="testing_model",

redshifts=params["redshift"],

emission_model=emission_model,

instrument=instrument,

sfhs=sfh_models,

metal_dists=zdists,

log_stellar_masses=params["log_stellar_mass"],

galaxy_params=galaxy_params,

params_to_ignore=["max_age"],

)

2025-11-13 20:02:47,638 | synference | INFO | Generating library directly from provided parameter samples.

Now that we have created an instance of the GalaxyBasis, we can run the library creation using the create_mock_library method. Here we set the output model name, which emission model key to generate the library from (by default total), as well as setting optional configuration parameters. We can see for our above emission model that the root key is emergent, so we will set that here. We could also change the output folder, which will otherwise default to the internal ‘libraries/’

folder where the synference code is installed.

The create_mock_library method utilizes Synthesizer’s built-in Pipeline functionality, which handles processing the galaxies in batches and supports parallel execution if Synthesizer has been installed with OpenMP support (see the Synthesizer installation documentation for more information). This significantly speeds up galaxy library creation, making it scalable across cores and nodes for generating very large samples.

We also save the max_age parameter, which is a derived parameter based on the redshift of the galaxy. This is useful for later analysis so we can easily reconstruct the original parameter space.

[14]:

def max_age(x):

"""Compute the maximum age of the universe at a given redshift."""

from astropy.cosmology import Planck18 as cosmo

from unyt import Myr

return cosmo.age(x["redshift"]).to_value("Myr") * Myr

param_transforms = {"max_age": ("max_age", max_age)}

[15]:

galaxy_basis.create_mock_library(

"test_model_library",

emission_model_key="emergent",

overwrite=True,

parameter_transforms_to_save=param_transforms,

n_proc=1,

)

2025-11-13 20:02:47,763 | synference | WARNING | Creating output directory /opt/hostedtoolcache/Python/3.10.19/x64/lib/python3.10/libraries.

2025-11-13 20:02:47,765 | synference | INFO | Checking parameters inside create_matched_galaxies.

2025-11-13 20:02:47,767 | synference | INFO | 100

Preparing galaxy batches: 100%|██████████| 100/100 [00:00<00:00, 276669.13it/s]

Creating galaxies: 100%|██████████| 1/1 [00:01<00:00, 1.67s/it]

2025-11-13 20:02:49,451 | synference | INFO | Created 100 galaxies.

Processing parameters: 100%|██████████| 7/7 [00:00<00:00, 8785.20it/s]

2025-11-13 20:02:49,459 | synference | INFO | Finished creating galaxies.

2025-11-13 20:02:49,460 | synference | INFO | Created 100 galaxies for base testing_model

2025-11-13 20:02:49,462 | synference | INFO | Creating pipeline.

2025-11-13 20:02:49,462 | synference | INFO | Processing all galaxies in a single batch.

2025-11-13 20:02:49,463 | synference | INFO | Running in single-node mode.

2025-11-13 20:02:49,464 | synference | INFO | Added analysis functions to pipeline.

2025-11-13 20:02:49,486 | synference | INFO | Running pipeline at 2025-11-13 20:02:49.486117 for 100 galaxies

2025-11-13 20:02:51,697 | synference | INFO | Finished running pipeline at 2025-11-13 20:02:51.697819 for 100 galaxies

2025-11-13 20:02:51,698 | synference | INFO | Pipeline took 0:00:02.211695 to run.

2025-11-13 20:02:51,971 | synference | INFO | Written pipeline to disk at /opt/hostedtoolcache/Python/3.10.19/x64/lib/python3.10/libraries/testing_model.hdf5.

2025-11-13 20:02:52,301 | synference | INFO | Compiling the library after processing bases.

2025-11-13 20:02:52,302 | synference | INFO | Emission model key for base testing_model:emergent

Loading galaxy properties: 1it [00:00, 77.13it/s]

2025-11-13 20:02:52,330 | synference | INFO | Combined outputs shape: (8, 100)

2025-11-13 20:02:52,331 | synference | INFO | Combined parameters shape: (6, 100)

2025-11-13 20:02:52,331 | synference | INFO | Combined supplementary parameters shape: (0, 100)

2025-11-13 20:02:52,332 | synference | INFO | Filter codes: ['JWST/NIRCam.F070W', 'JWST/NIRCam.F090W', 'JWST/NIRCam.F115W', 'JWST/NIRCam.F150W', 'JWST/NIRCam.F200W', 'JWST/NIRCam.F277W', 'JWST/NIRCam.F356W', 'JWST/NIRCam.F444W']

2025-11-13 20:02:52,333 | synference | INFO | Parameter names: ['redshift', 'log_mass', 'tau_v', 'tau', 'peak_age', 'log10metallicity']

2025-11-13 20:02:52,333 | synference | INFO | Parameter units: ['dimensionless', 'log10_Msun', 'mag', 'dimensionless', 'Myr', 'log10(Zmet)']

2025-11-13 20:02:52,365 | synference | INFO | Processed the bases and saved the output.

[15]:

<synference.library.CombinedBasis at 0x7f737bf5f3d0>

The above code created a lot of output, but we can break it down. In order, it:

Created the individual galaxies, setting the attributes as we described.

Instantiated a

Pipelineand passed in the array of galaxies.Ran the pipeline for the galaxies to generate the observed spectroscopy and photometry, which is stored in a HDF5 file.

Loaded the saved HDF5 file, extracted the photometry for our emission key ‘emergent’, and generated the photometry and input parameter libraries.

Saved these separate libraries into a different HDF5 file, which will be used by the code later.

Inspecting our Library¶

There are numerous packages for inspecting HDF5 files, including the h5py package, which you have installed if you have run the code to this point without crashing. For more visual views, we recommend H5Web, which has a VS Code extension, or the command line interface h5forest, which you can find on Github here.

Below we use the h5py library to inspect the structure and contents of our saved dataset:

[16]:

import h5py

from synference import library_folder

with h5py.File(f"{library_folder}/test_model_library.hdf5") as f:

for dataset in f:

print(f"- {dataset}")

for array in f[dataset]:

print(f" - {array}")

if isinstance(f[dataset][array], h5py.Dataset):

print(f" - Dataset shape: {f[dataset][array].shape}")

elif isinstance(f[dataset][array], h5py.Group):

print(f" - Group: {list(f[dataset][array].keys())}")

- Grid

- Parameters

- Dataset shape: (6, 100)

- Photometry

- Dataset shape: (8, 100)

- SupplementaryParameters

- Dataset shape: (0, 100)

- Model

- EmissionModel

- Group: []

- Instrument

- Group: ['Filters', 'Resolution']

- Transforms

- Group: ['max_age']

Our HDF5 file contains the following key components:

Parameters array: Contains the input samples for all 6 parameters we defined for our prior (mass, redshift, tau_v, metallicity, peak_age, tau) with 100 draws from the prior for each parameter

Photometry array: Our synthetic observables consisting of 8 photometric fluxes (corresponding to the 8 NIRCam widebands) calculated for each of the 100 galaxy draws

Supplementary parameters array: An empty supplementary parameters array in this case, designed to store optional derived quantities such as star formation rates, or the surviving stellar mass

Model Group: This stores information about the emission model and instrument used, allowing us recreate the emission model and instrument later if we need to

It’s worth noting that the only required arrays are the ‘parameters’ and ‘photometry’ datasets. So you can entirely avoid using Synthesizer and build models externally using your code and method of choice, as long as you can produde a HDF5 array with the same simple format you will be able to use the SBI functionality of Synference with your code. Please see this example, where we train a model from the outputs of the hydrodynamical simulation SPHINX.

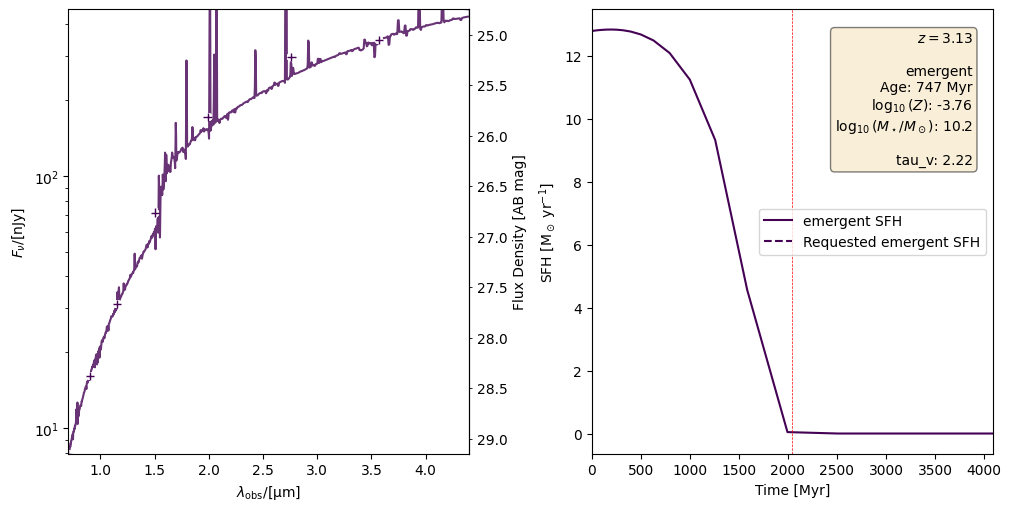

Plotting a galaxy from our model¶

Synference has some debug methods to plot specific or random individual galaxy SEDs, photometry and star formation histories - plot_galaxy and plot_random_galaxy. Below we plot a random galaxy from the model.

[17]:

galaxy_basis.plot_random_galaxy(masses=params["log_stellar_mass"], emission_model_keys=["emergent"])

[17]: